It seems like Google has a thing for launching a major algorithm or Search update in October. Perhaps they learned their lesson from launching core updates in November and December, just ahead of the holiday season, where the changes hit various merchants hard, as the original Florida Update did in 2003.

More recently, RankBrain, a component of Google’s core algorithm that uses machine learning to determine the most relevant results for search queries, was launched in October 2015. Now, in October 2019, Google has announced what they describe as another major Search release: the launch of their BERT algorithm.

There has been a lot of information about BERT since its launch, so we thought we would compile some fundamentals about Google’s BERT algorithm and what it means for Search.

Frequently Asked Questions about Google’s BERT Algorithm

What is BERT?

BERT, or Bidirectional Encoder Representations from Transformers, is a neural network-based technique for natural language processing pre-training that can be used to help Google better discern the context of words in search queries.

Put simply, Google has updated components of its algorithm to better understand natural language.

What is a neural network?

Neural networks are sets of algorithms designed to recognize patterns. They are loosely modelled after the human brain. From a machine learning perspective, “A neural network is a type of machine learning which models itself after the human brain. This creates an artificial neural network that via an algorithm allows the computer to learn by incorporating new data.” – Techradar.com

When did Google launch BERT?

Google announced the launch of BERT on October 25, 2019. The official announcement from Google can be found here: https://www.blog.google/products/search/search-language-understanding-bert/.

How does BERT work?

BERT allows the language model to learn word context based on surrounding words rather than just following the contextual order of the word that immediately precedes or follows it. As Google states, BERT is able to “process words in relation to all the other words in a sentence, rather than one-by-one in order. BERT models can therefore consider the full context of a word by looking at the words that come before and after it—particularly useful for understanding the intent behind search queries.”
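To make the difference concrete, here is a toy sketch of which words are "visible" when a model processes one word of a query. It is only an illustration of the idea in Google's quote, not how BERT itself is implemented; the function names `left_to_right_context` and `bidirectional_context` are made up for this example, and the query is one of Google's own sample searches.

```python
def left_to_right_context(tokens, index):
    """Words a strictly left-to-right reader has seen before tokens[index]."""
    return tokens[:index]


def bidirectional_context(tokens, index):
    """Words on both sides of tokens[index], i.e. everything except the word itself."""
    return tokens[:index] + tokens[index + 1:]


query = "parking on a hill with no curb".split()
position = query.index("no")

# A left-to-right reader reaches "no" without having seen "curb" yet.
print(left_to_right_context(query, position))

# A bidirectional reader can relate "no" to "curb" as well as to "hill".
print(bidirectional_context(query, position))
```

In the first list the word "curb" is missing, which is why reading in one direction alone makes it harder to work out what "no" applies to.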

What is the impact of BERT?

Google has stated that BERT will initially impact 10% of English searches in the U.S., and that they will be bringing it to more languages and locales over time. So, if you are in Canada and use Google.ca for your search, you may not notice any difference at this time.

With its focus on natural language processing, the BERT algorithm will be particularly useful for longer, more conversational queries, or searches where prepositions like “for” and “to” matter a lot to the meaning. In those cases, Search will be able to understand the context of the words in your query.

Google has stated that BERT has been applied to Featured Snippets as well.

Will BERT be applied to all searches?

No. Google suggests that BERT will impact one in ten searches, based on English search activity in the U.S. BERT may not be required for shorter search queries, for branded search terms, or where the intent is already obvious.

Do you have an example of BERT in action?

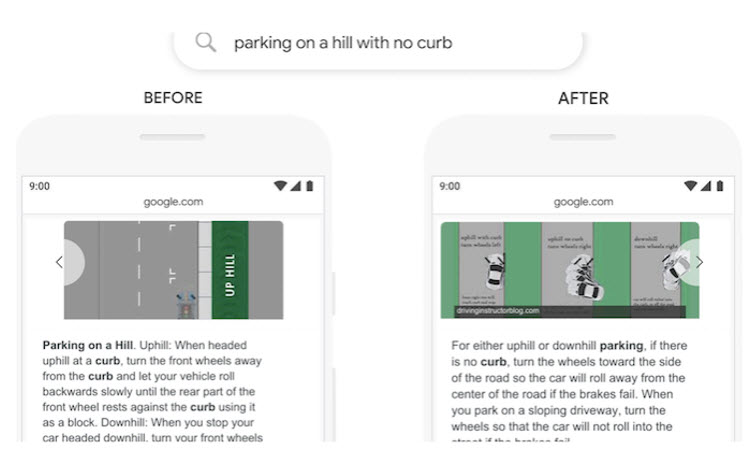

Here is one of the examples that Google is using to illustrate the impact of BERT. If you searched for “parking on a hill with no curb”, Google used to place too much emphasis on the word “curb” and not enough emphasis on the word “no”.

So the BERT algorithm update makes some results more relevant for users depending on the intent of their search query.

Why did Google launch the BERT algorithm?

Simply put, Google is looking to apply BERT to make Search better (and more relevant) for people across the world. It is evident that the BERT algorithm is meant to be applied to more long-tail search terms to help deliver the most relevant result based on the searcher’s intent. Language understanding remains an ongoing challenge for Google and all search engines, so BERT, which may run in conjunction with other algorithm components such as RankBrain, is intended to help deliver the most relevant search results based on a user’s query.

Is BERT the same as RankBrain?

No, they are two separate algorithm components. RankBrain works to understand queries based in part on historical search activity, while BERT looks at bidirectional, as opposed to linear, word relationships within a query. BERT can train language models on the entire set of words in a sentence or query (bidirectional training) rather than the traditional way of training on the ordered sequence of words (left-to-right, or combined left-to-right and right-to-left). Google may use multiple methods to understand a query, meaning that BERT could be applied on its own or alongside other Google algorithms (such as RankBrain).
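Bidirectional training is often explained through word masking: the published BERT research hides a word in a sentence and asks the model to predict it from the words on both sides. The toy sketch below only builds one such training example. The helper name `make_masked_example` is hypothetical, and nothing here reflects how Google actually runs BERT inside Search.

```python
MASK = "[MASK]"  # placeholder token, following the convention in the BERT research


def make_masked_example(tokens, index):
    """Hide tokens[index] and return (masked_tokens, hidden_word)."""
    masked = list(tokens)  # copy so the original query is left untouched
    hidden_word = masked[index]
    masked[index] = MASK
    return masked, hidden_word


query = "parking on a hill with no curb".split()
masked, target = make_masked_example(query, query.index("no"))

print(" ".join(masked))  # the sentence the model would see
print(target)            # the word it would be asked to predict
```

Because the hidden word sits in the middle of the query, a model can only guess it well by using both what comes before ("hill with") and what comes after ("curb").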

Do I need to optimize for BERT?

Well, not exactly. There is no real way to optimize for the BERT algorithm. Always create your content with your user in mind and work to serve up the information that they are looking for. Be clear and concise in your messaging and you should be able to retain or gain visibility in Google Search results.

Optimize for E-A-T (Expertise, Authoritativeness and Trustworthiness). Today’s content optimization is more about answering a searcher’s question as quickly as possible and providing more value than the competing sources for that topic.

One of my mantras when it comes to content creation is to provide the RIGHT content in the RIGHT place that is available at the RIGHT time. Prepare smart content, people.

Where can I find more information about BERT?

There has been a lot written about BERT in the mere week and a half since it launched, so look for additional articles and insight. For now, here are some additional resources about the BERT algorithm.

- Understanding searches better than ever before – Google Blog

- BERT Explained: State of the art language model for NLP – Towards Data Science

- FAQ: All about the BERT algorithm in Google search – Search Engine Land

- Google BERT Update Impacts 10% of Queries & Has Been Rolling Out All Week – Search Engine Roundtable

Google has stated that this is one of their biggest steps in Search in the past five years. This could very well be one of the largest steps forward in Search that we have seen, with A.I. and machine learning being used to deliver more relevant information. BERT will help Google understand queries and content that are more human-like, more natural in their language and more conversational. I’ve discussed the importance of voice search in the past, and BERT just seems like a natural progression in Google’s attempt to match relevant results with the user’s search intent in their quest for answers and information. After all, isn’t that why we search?

Related Resources

Search Engine Land: FAQ: All about the BERT algorithm in Google search https://searchengineland.com/faq-all-about-the-bert-algorithm-in-google-search-324193

MOZ: Google RankBrain: https://moz.com/learn/seo/google-rankbrain

Google: Content Quality Guidelines